News

January 27, 2025 R-AIF: Solving Sparse-Reward Robotic Tasks from Pixels with Active Inference and World Models has been accepted as an article at the 2025 International Conference on Robotics and Automation (ICRA 2025); the original arXiv link is here.

December 18, 2024 George (Zhizhuo) Yang, PhD and member of NAC, has successfully passed his doctoral dissertation defense.

September 16, 2024 Contrastive-Signal-Dependent Plasticity: Self-Supervised Learning in Spiking Neural Circuits (in press) accepted as a full article in Science Advances; the original arXiv link is here.

November 6, 2023 A Robust Backpropagation-Free Framework for Images accepted as full article for publication in Transactions on Machine Learning Research (TMLR)

August 8, 2023 A Neuro-Mimetic Realization of the Common Model of Cognition via Hebbian Learning and Free Energy Minimization accepted as an article and talk for the AAAI 2023 Fall Symposium Series (AAAI FSS) symposium on Integration of Cognitive Architectures and Generative Models. Alex was nominated to give the plenary speech on behalf of the Cognition FSS Symposium.

May 5, 2023 The Neural Coding Framework for Learning Generative Models was chosen by the editors of Nature Communications to be featured in their collection of recent research and advances on Neuromorphic Hardware and Computing (this achievement was also featured on the RIT Newsmakers channel)

April 25, 2023 Zhizhuo Yang has successfully passed his dissertation proposal defense.

April 17, 2023 Hitesh Vaidya will be starting his internship at Meta Amazon in summer 2023

April 6, 2023 Convolutional Neural Generative Coding: Scaling Predictive Coding to Natural Images accepted as full paper for publication at the Cognitive Science Society conference (CogSci 2023)

March 31, 2023 William Gebhart has been awarded the AWARE-AI fellowship

March 13, 2023 Spiking Neural Predictive Coding for Continual Learning from Data Streams accepted as full article for publication in Neurocomputing

February 24, 2023 Zhizhuo Yang will be starting his internship at Meta (Facebook) in summer 2023

January 30, 2023 A Neural Active Inference Model of Perceptual-Motor Learning accepted as full article for publication in Frontiers in Computational Neuroscience

January 16, 2023 Active Predicting Coding: Brain-Inspired Reinforcement Learning for Sparse Reward Robotic Control Problems accepted as full paper for publication at the 2023 IEEE International Conference on Robotics and Automation (ICRA 2023)

November 22, 2022 Large-Scale Gradient-Free Deep Learning with Recursive Local Representation Alignment accepted as full paper for publication at the Thirty-Seventh AAAI Conference on Artificial Intelligence (AAAI 2023)

September 14, 2022 Lifelong Neural Predictive Coding: Learning Cumulatively Online without Forgetting accepted as full paper for publication at the Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS 2022)

July 18, 2022 Maze Learning using a Hyperdimensional Predictive Processing Cognitive Architecture accepted as full paper for publication at the 15th Conference on Artificial General Intelligence (AGI 2022)

April 21, 2022 Our article The Neural Coding Framework for Learning Generative Models was chosen to appear in the Nature Communications Editors' Highlights for Applied Physics & Mathematics

April 12, 2022 CogNGen: Constructing the Kernel of a Hyperdimensional Predictive Processing Cognitive Architecture accepted as full paper for publication at the Cognitive Science Society conference (CogSci 2022); the original PsyArXiv link is here.

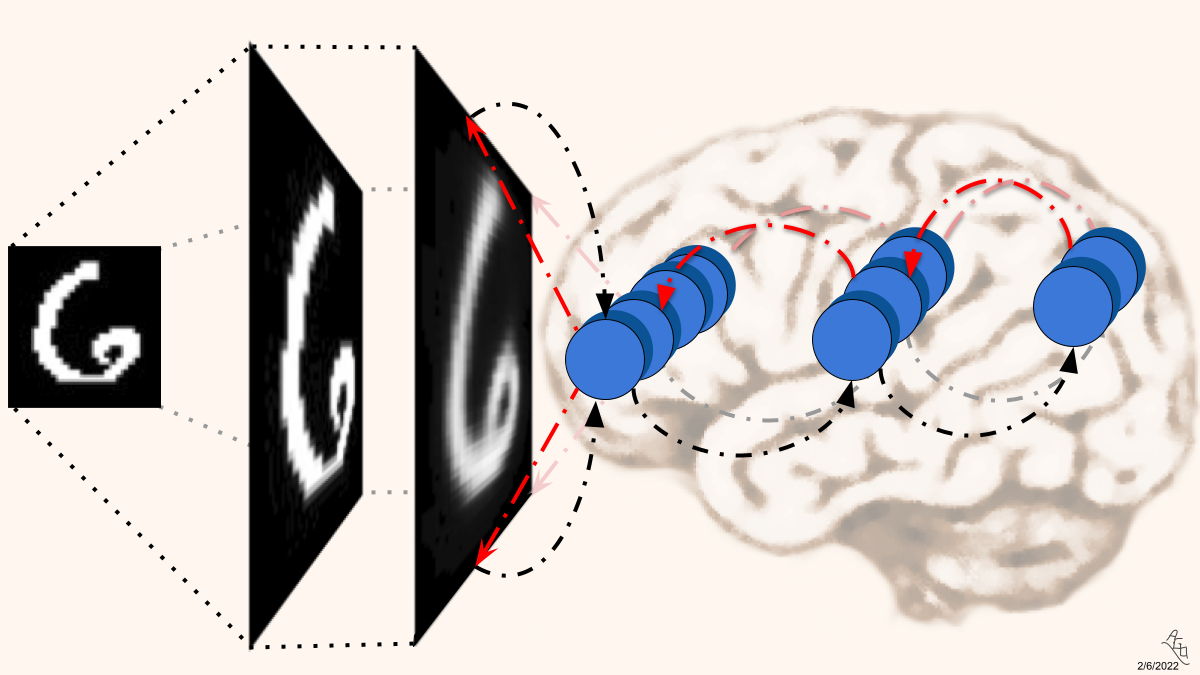

January 24, 2022 The Neural Coding Framework for Learning Generative Models accepted as full article in Nature Communications (NCOMMS 2022); the original arXiv link is here.

December 1, 2021 Backprop-Free Reinforcement Learning with Active Neural Generative Coding, accepted for publication at the Thirty-Sixth AAAI Conference on Artificial Intelligence (AAAI-22)

November 24, 2021 Hitesh Vaidya, MSc and member of NAC, has successfully passed his master's thesis defense.

November 18, 2021 Reducing Catastrophic Forgetting in Self Organizing Maps with Internally-Induced Generative Replay, accepted for publication as a student abstract at the Thirty-Sixth AAAI Conference on Artificial Intelligence (AAAI-22)

May 15, 2021 Towards a Predictive Processing Implementation of the Common Model of Cognition, accepted for publication as an extended abstract at the International Conference on Cognitive Modeling (ICCM 2021)

December 27, 2020 An Empirical Analysis of Recurrent Learning Algorithms In Neural Lossy Image Compression Systems accepted as full paper for publication at the 2021 Data Compression Conference proceedings (DCC 2021)

December 15, 2020 Michael Peechat, MSc and member of NAC, has successfully passed his master's thesis defense.

December 9, 2020 AbdelRahman Elsaid, PhD and member of NAC, has successfully passed his doctoral dissertation defense.

December 8, 2020 James Le, MSc and member of NAC, has successfully passed his master's thesis defense.

December 3, 2020 Recognizing and Verifying Mathematical Equations using Multiplicative Differential Neural Units, accepted for publication at the Thirty-Fifth AAAI Conference on Artificial Intelligence (AAAI-21)

March 20, 2020 Improving Neuroevolutionary Transfer Learning of Deep Recurrent Neural Networks through Network-Aware Adaptation accepted as full paper for publication at The Genetic & Evolutionary Computation Conference (GECCO 2020)

January 9, 2020 Three of my team's papers accepted for publication at EvoStar 2020, including The Ant Swarm Neuro-Evolution Procedure for Optimizing Recurrent Networks and An Empirical Exploration of Deep Recurrent Connections and Memory Cells Using Neuro-Evolution

December 26, 2019 Sibling Neural Estimators: Improving Iterative Image Decoding with Gradient Communication accepted as full paper for publication at the 2020 Data Compression Conference proceedings (DCC 2020)

June 25, 2019 Continual Learning of Recurrent Neural Networks by Locally Aligning Distributed Representations accepted as full article in IEEE Transactions on Neural Networks and Learning Systems (TNNLS 2019)

May 14, 2019 Like a Baby: Visually Situated Neural Language Acquisition accepted for publication at the 57th Annual Meeting of the Association for Computational Linguistics (ACL 2019)

March 20, 2019 Investigating Recurrent Neural Network Memory Structures using Neuro-Evolution accepted as full paper for publication at The Genetic & Evolutionary Computation Conference (GECCO 2019)

March 11, 2019 A Neural Temporal Model for Human Motion Prediction accepted for publication at the Conference on Computer Vision and Pattern Recognition (CVPR 2019)

December 27, 2018 Learned Neural Iterative Decoding for Lossy Image Compression Systems accepted as full paper for publication at the 2019 Data Compression Conference proceedings (DCC 2019)

October 31, 2018 Biologically Motivated Algorithms for Propagating Local Target Representations, accepted for publication at the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI-19)
