In this area, we explore the reading of avatar faces.

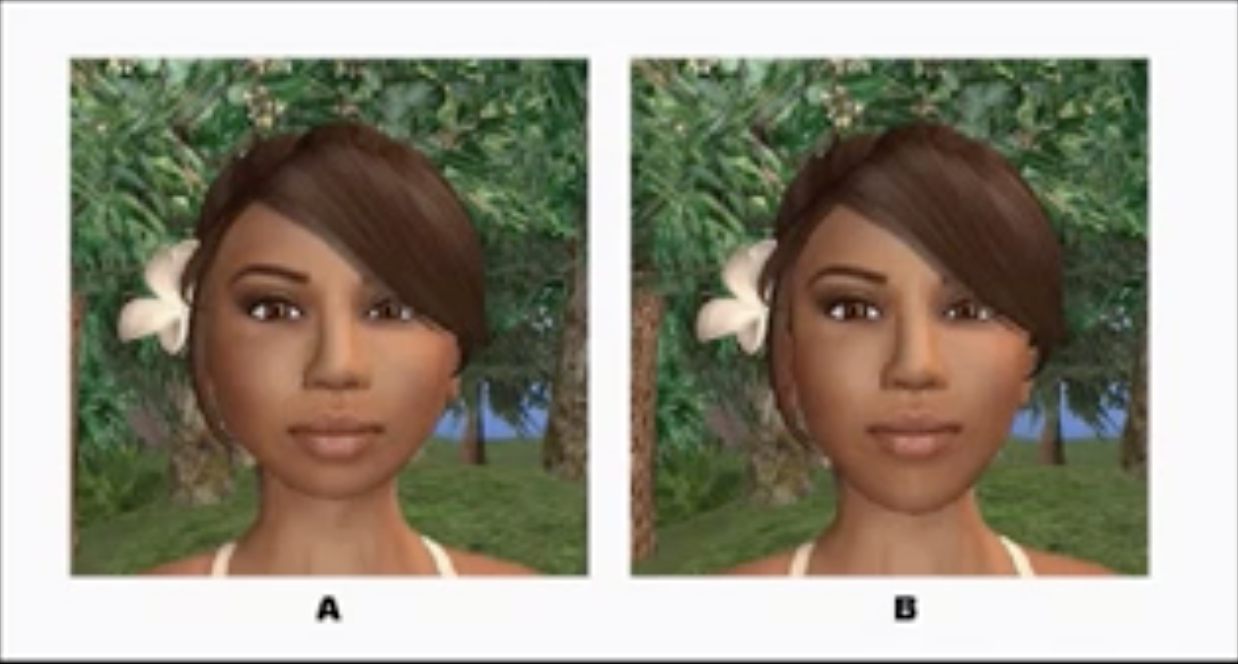

In the first work, we investigate whether we make personality judgments when viewing an avatar’s face. Using Second Life avatars as stimuli, we employ paired-comparison tests to determine the implications of certain facial features. Our results suggest that people judge an avatar by its looks, and that such judgments are sensitive to the eeriness and babyfacedness of the avatar.
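To make the paired-comparison idea concrete, the sketch below shows one simple way such judgments could be aggregated: each trial records which of two avatars a viewer chose for a given trait, and each avatar is scored by the fraction of its comparisons that it won. This is only an illustration and not necessarily the analysis used in the study; the function name `win_proportions`, the avatar labels, and the trial data are all hypothetical.

```python
from collections import Counter


def win_proportions(trials):
    """Return, for each item, the fraction of its comparisons that it won.

    `trials` is an iterable of (chosen, rejected) pairs, one per judgment.
    """
    wins = Counter()
    appearances = Counter()
    for chosen, rejected in trials:
        wins[chosen] += 1
        appearances[chosen] += 1
        appearances[rejected] += 1
    return {item: wins[item] / appearances[item] for item in appearances}


# Hypothetical judgments (made-up data): which avatar looks more trustworthy?
trials = [
    ("avatar_a", "avatar_b"),
    ("avatar_a", "avatar_c"),
    ("avatar_b", "avatar_c"),
    ("avatar_a", "avatar_b"),
    ("avatar_b", "avatar_c"),
    ("avatar_a", "avatar_c"),
]

scores = win_proportions(trials)
# Order avatars from most to least often chosen.
ranking = sorted(scores, key=scores.get, reverse=True)
```

A raw win proportion is the simplest possible summary. A fuller analysis would typically fit a scaling model to the same trial data, which this sketch does not attempt.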

In the second work, we explore the use of facial expression analysis and eye tracking to drive emotionally expressive avatars. We propose a system that transfers facial emotional signals, including facial expressions and eye movements, from the real world into a virtual world. The proposed system lets us address two questions: How significant are eye movements in emotion expression? Can facial emotional signals be transferred effectively from the real world into virtual worlds?